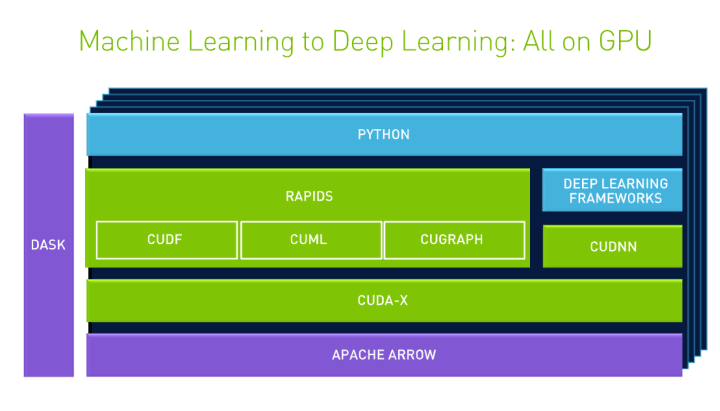

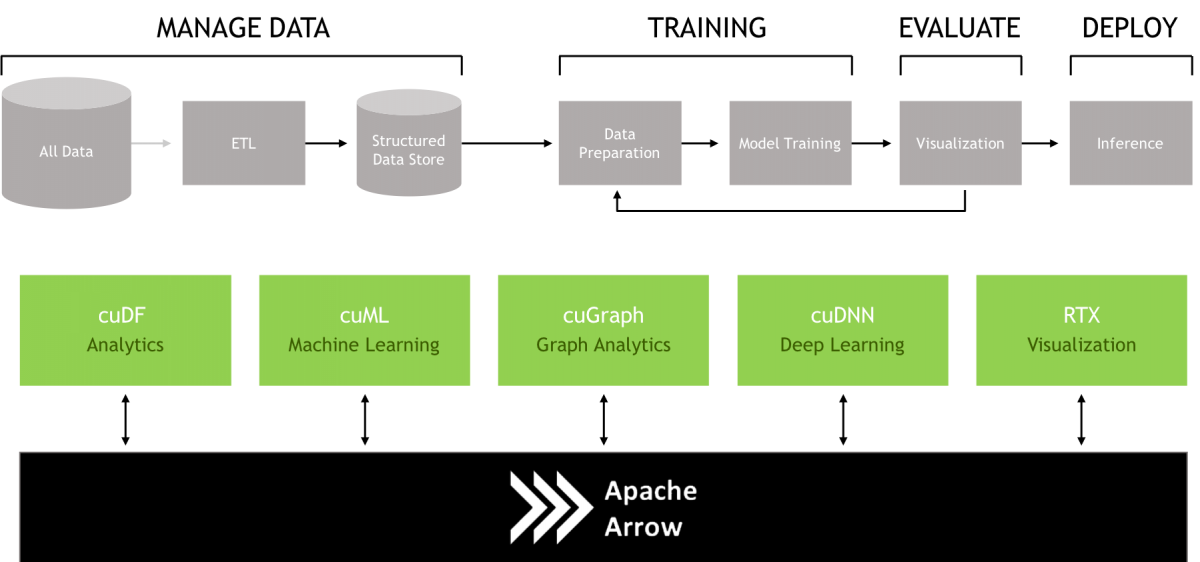

RAPIDS is an open source effort to support and grow the ecosystem of... | Download Scientific Diagram

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

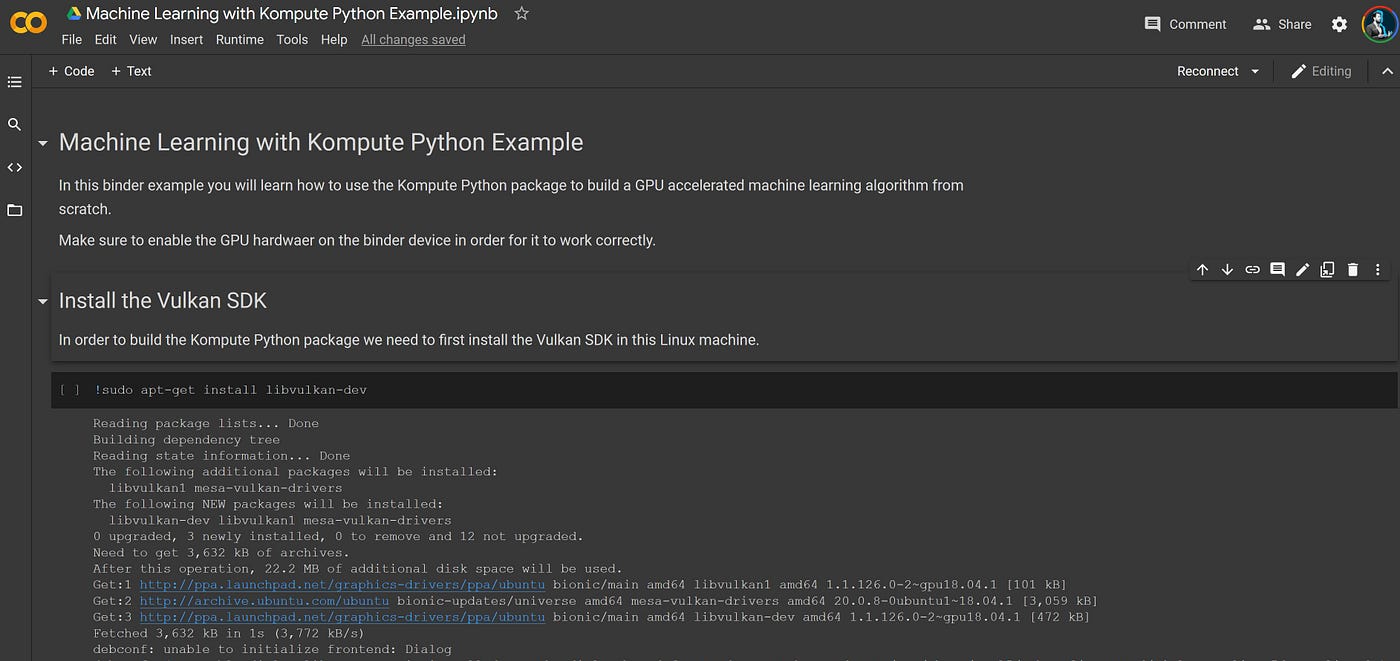

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

GPU parallel computing for machine learning in Python: how to build a parallel computer, Takefuji, Yoshiyasu, eBook - Amazon.com

Best Python Libraries for Machine Learning and Deep Learning | by Claire D. Costa | Towards Data Science

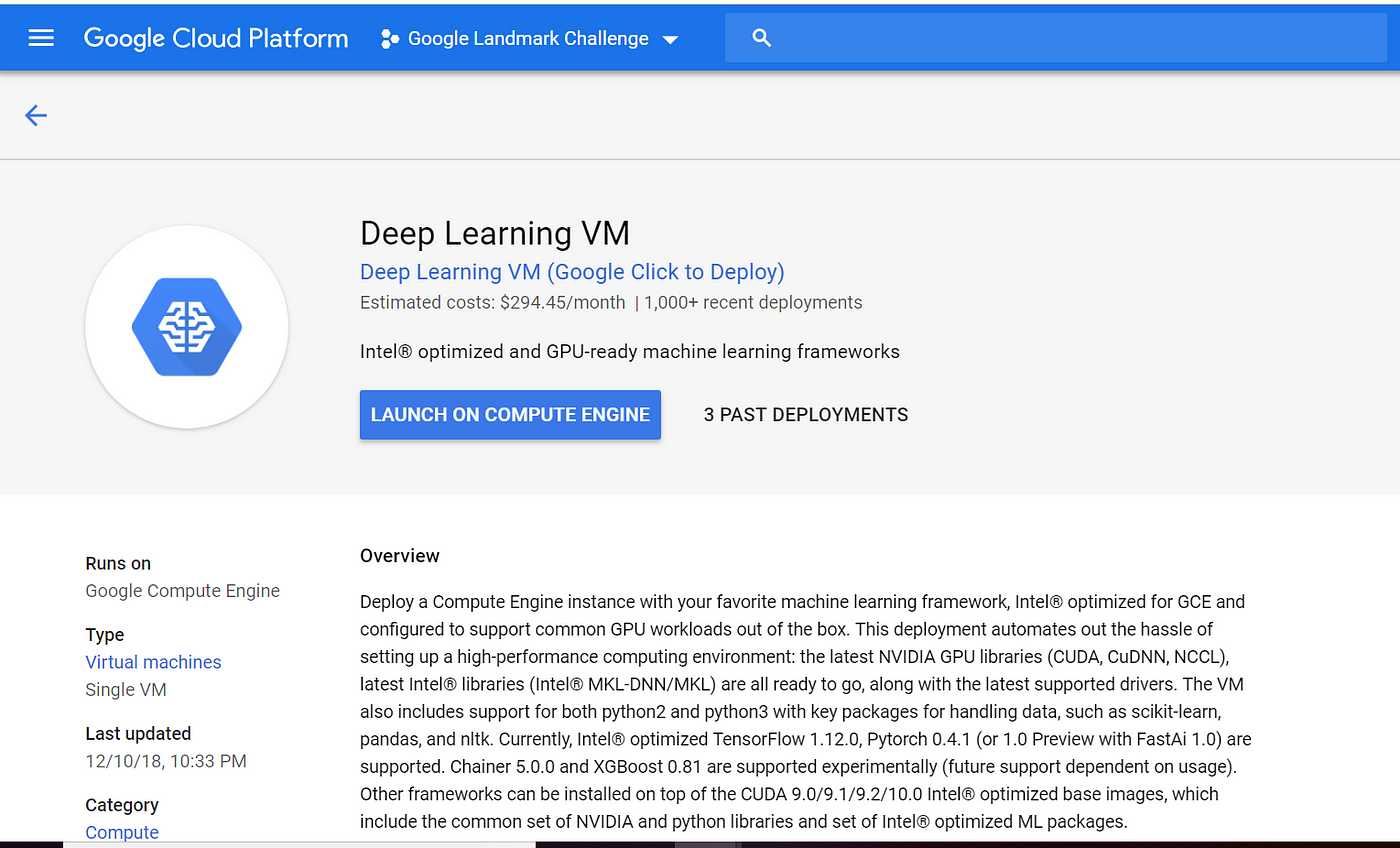

How to run Deep Learning models on Google Cloud Platform in 6 steps? | by Abhinaya Ananthakrishnan | Google Cloud - Community | Medium

Hands-On GPU Computing with Python: Explore the capabilities of GPUs for solving high performance computational problems, Bandyopadhyay, Avimanyu, ISBN 9781789341072 - Amazon.com

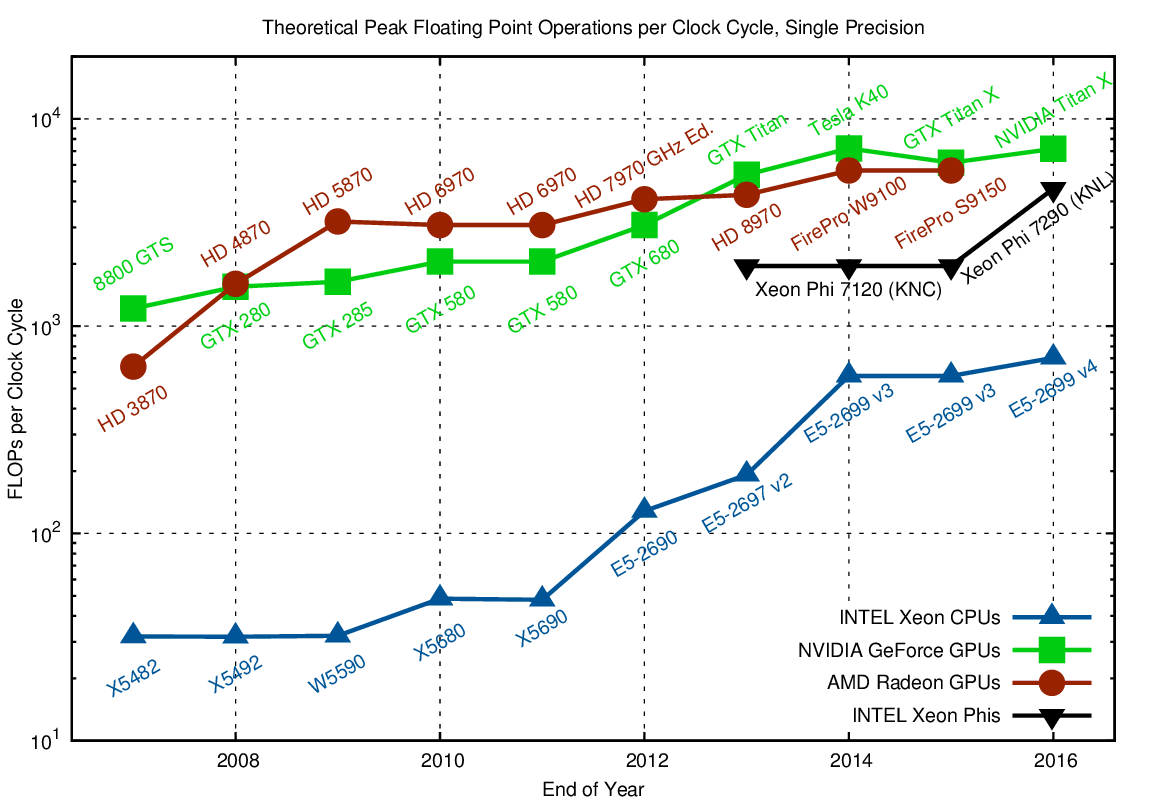

NVIDIA's Answer: Bringing GPUs to More Than CNNs - Intel's Xeon Cascade Lake vs. NVIDIA Turing: An Analysis in AI